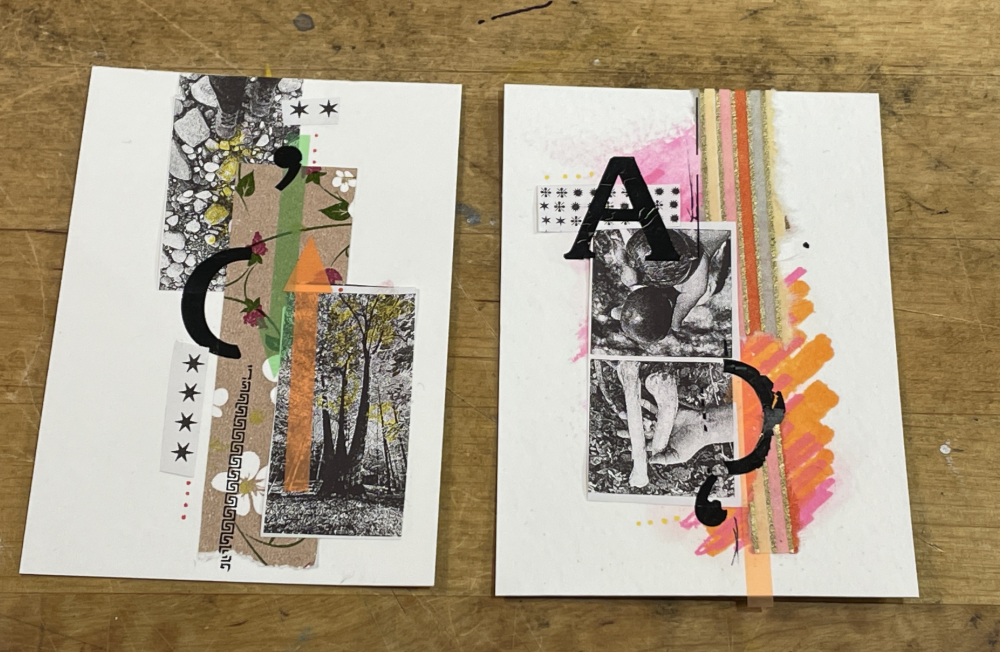

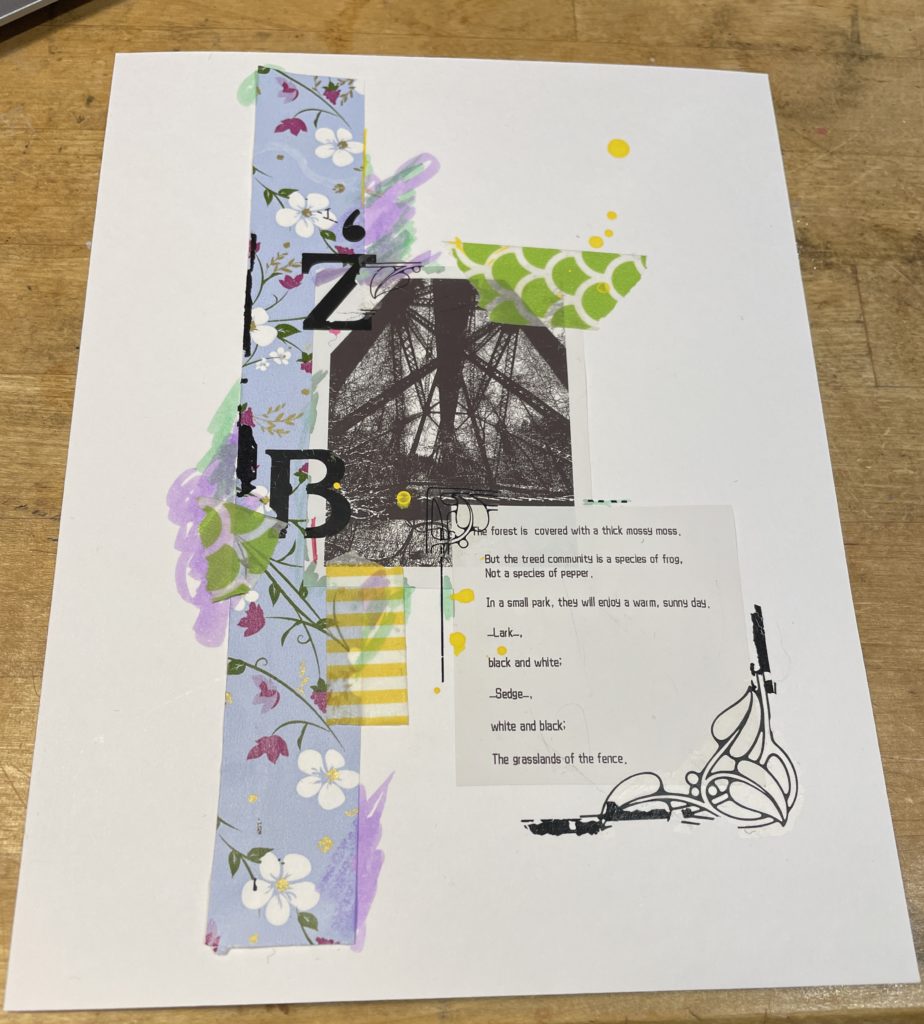

So I got into a small residency with UKAI, which is a Toronto-based arts group looking at AI and at structures to support art in a new kind of future. It’s been good to be in this world again, although I don’t have the same capacity I used to have. For this one I knew I wanted to work with cameras on some level. I had this idea a while ago of security cameras that only look at boring stuff, as a continuation of some of the themes on autonomy and useless systems that I was poking at in 2019, and that I also looked at in 2022.

In 2022 I went to Stratford and did a mini weekend residency where I made a bunch of autonomous Zooms called In Camera Meeting, where different tablets watched a movie together. In another piece I mounted many tablets in a room, and if someone approached one they would see themselves from a different camera’s point of view. So it was really about the device perspective.

This post is a bit late. I wanted to write it when I started, but I’m not always great at timing things. Anyways, I spent about 2 weeks experimenting w/ cheap cameras and setting up a network, and it’s since evolved from “looking at boring corners” to “looking at one’s self”, but the “one” here is still the device. And so I’m kind of working in a space where the camera devices I have are not interested in people, even tho sometimes people are around. Anyways, I’m going to write at least two more posts: one about where things are at right now, and the other about just loose concepts and theory.