So I’ve had some good raw generations. The process I’m using here is a variation on the one I had in 2019. I start with a photo I’ve taken, and I feed that into a caption generator and a prompt generator (I might add a third caption generator to the list, like Flickr8, but CLIP Prefix / Interrogator has been decent). I play with the settings available to me, generally temperature and top_k, until I get something back that I think is interesting.
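For anyone unfamiliar with the two settings mentioned above, here is a minimal sketch of what temperature and top_k do when a model picks its next token. It is an illustration in plain Python, not GPT-Neo's actual code; the function name `sample_top_k` and the toy scores are made up for the example.

```python
import math
import random


def sample_top_k(logits, temperature=1.0, top_k=0, rng=random):
    """Pick one token index from raw scores using temperature and top-k sampling.

    Hypothetical helper for illustration only; real libraries do this on tensors.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")

    # Rank candidates by score, highest first.
    ranked = sorted(enumerate(logits), key=lambda pair: pair[1], reverse=True)

    # top_k keeps only the k best candidates; 0 means "keep everything".
    if top_k > 0:
        ranked = ranked[:top_k]

    # Dividing by temperature flattens the distribution when it is above 1
    # (more surprising picks) and sharpens it when below 1 (safer picks).
    scaled = [score / temperature for _, score in ranked]

    # Softmax weights; subtracting the peak avoids overflow in exp().
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]

    indices = [index for index, _ in ranked]
    return rng.choices(indices, weights=weights, k=1)[0]


if __name__ == "__main__":
    toy_scores = [0.1, 2.0, 0.5, 1.5]  # made-up scores for four candidate tokens
    print([sample_top_k(toy_scores, temperature=1.2, top_k=3) for _ in range(10)])
```

Raising the temperature or top_k lets lower-ranked tokens through more often, which is roughly why nudging those two values changes how strange the output feels.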

I run the same prompt text several times to get back a bulk of output. I then look through that raw output and pick out a few specific lines, which I then feed back into GPT-Neo. Again I adjust the settings until I feel something interesting comes back. I might do this 2 or 3 times. After that, I comb through all the output and start editing things into small vignettes. I found that using the single-line captions from CLIP Prefix, or editing a sentence together from Interrogator, worked better as GPT-Neo prompts than plunking in whole word salads.
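The loop described above could be sketched roughly like this. It is a sketch under assumptions, not the exact setup used here: it assumes the Hugging Face `transformers` text-generation pipeline, the `EleutherAI/gpt-neo-1.3B` checkpoint, and example sampling values; the helper names (`gpt_neo_generator`, `run_round`, `iterate`) are hypothetical. The `pick` function stands in for the part that is really done by hand, reading the raw output and choosing lines.

```python
def gpt_neo_generator(model_name="EleutherAI/gpt-neo-1.3B",
                      temperature=0.9, top_k=50, max_new_tokens=60):
    """Build a prompt -> text function. Model size and settings are assumptions."""
    from transformers import pipeline  # imported lazily; requires `pip install transformers`

    pipe = pipeline("text-generation", model=model_name)

    def generate(prompt):
        out = pipe(prompt, do_sample=True, temperature=temperature,
                   top_k=top_k, max_new_tokens=max_new_tokens)
        return out[0]["generated_text"]

    return generate


def run_round(generate, prompt, runs=5):
    """Run the same prompt several times and collect the non-empty output lines."""
    lines = []
    for _ in range(runs):
        text = generate(prompt)
        lines.extend(line.strip() for line in text.splitlines() if line.strip())
    return lines


def iterate(generate, seed_prompt, pick, rounds=3, runs=5):
    """Generate, pick a few lines, feed them back in; repeat for a few rounds."""
    prompts = [seed_prompt]
    archive = []  # everything generated, kept for later editing into vignettes
    for _ in range(rounds):
        next_prompts = []
        for prompt in prompts:
            raw = run_round(generate, prompt, runs)
            archive.extend(raw)
            next_prompts.extend(pick(raw))  # in practice this choice is manual
        if not next_prompts:
            break
        prompts = next_prompts
    return archive


if __name__ == "__main__":
    generate = gpt_neo_generator()
    # Placeholder caption and a placeholder picker that keeps the two longest lines.
    keep_longest = lambda lines: sorted(lines, key=len, reverse=True)[:2]
    for line in iterate(generate, "a dog standing in a field", keep_longest, rounds=2, runs=3):
        print(line)
```

Keeping `generate` and `pick` as plain functions means the settings can be changed between rounds, and the automatic picker can be swapped for a person reading the output, which is closer to how the process actually works.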

One thing I’ve noticed is that I didn’t need as much data as I thought I would for this process. In my original write-up I was going to use my journals, planning docs, and caption output, and I still might…because I spent the time sorting them. But I have a lot of good material to work with just from prompting GPT-Neo with captions, and I’m starting to feel that including too much extra text would just dilute my process.

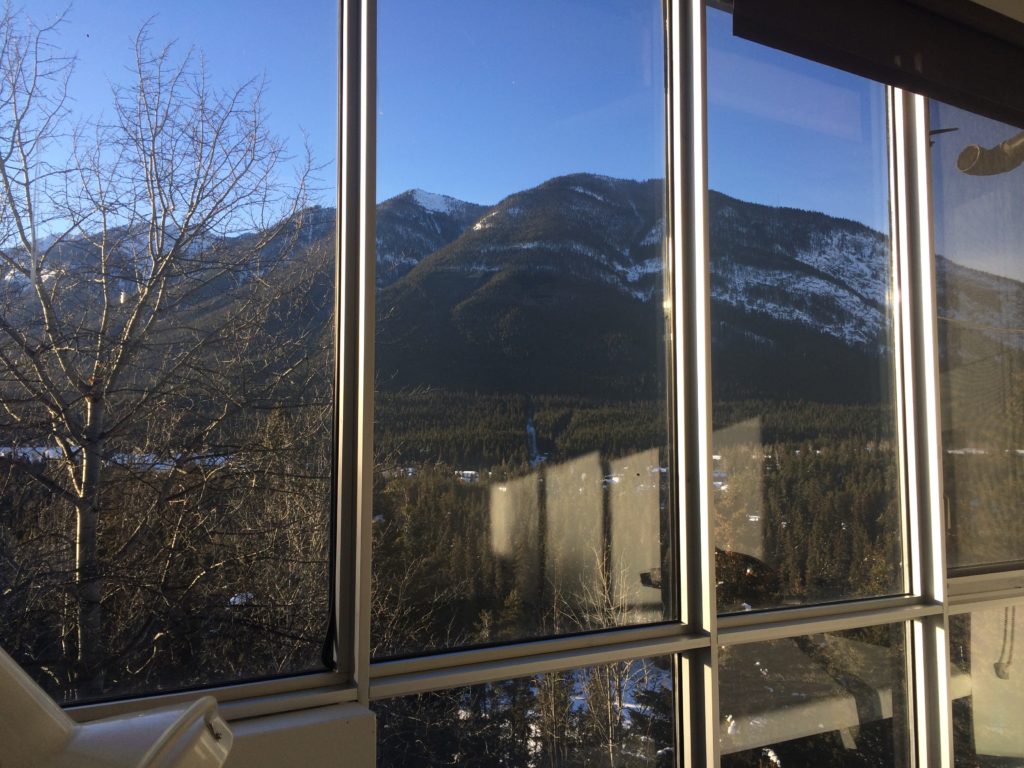

I also found I didn’t need as many photos as I thought I would, mostly because I’m doing a manual process vs fine-tuning or something more automated. So part of this project has been just sorting. Sorting my photos, manually deciding what to keep, what to use, what to discard. Sorting through planning docs and picking out specific phrases. Sorting through raw output text.

It’s like an excavation of my personal archive. It’s enjoyable, and I feel it’s a deliberately slow way of working during a time when so many things want to go FAST. Maybe something to consider from here is how to develop more methodologies for working slowly with AI.