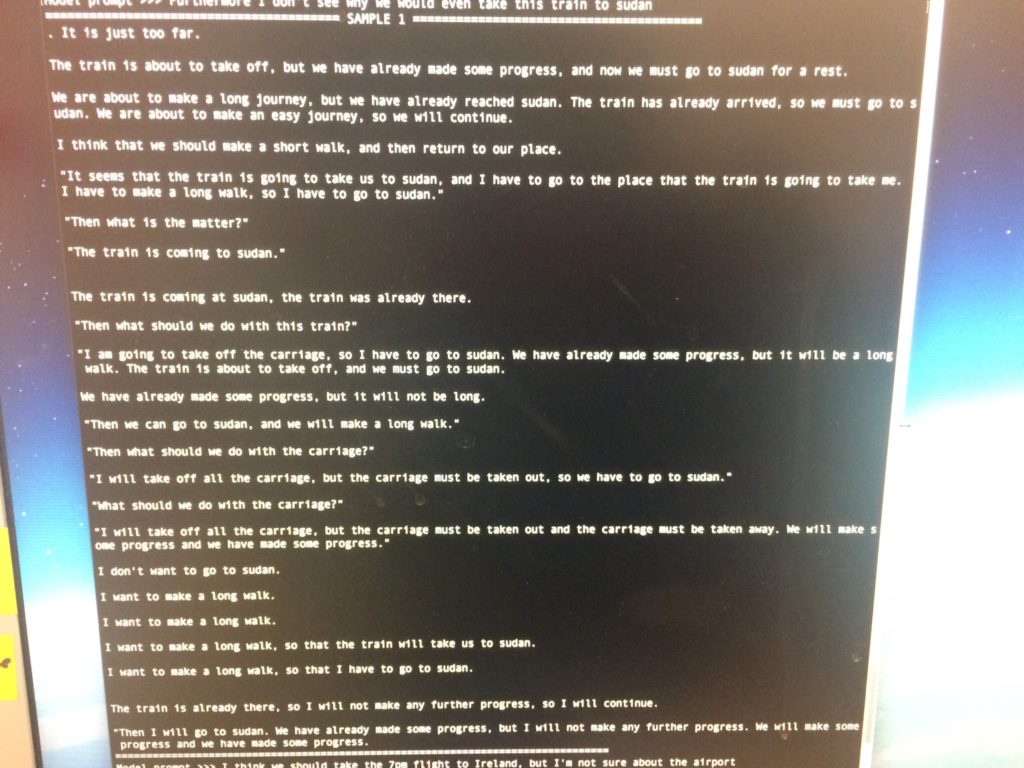

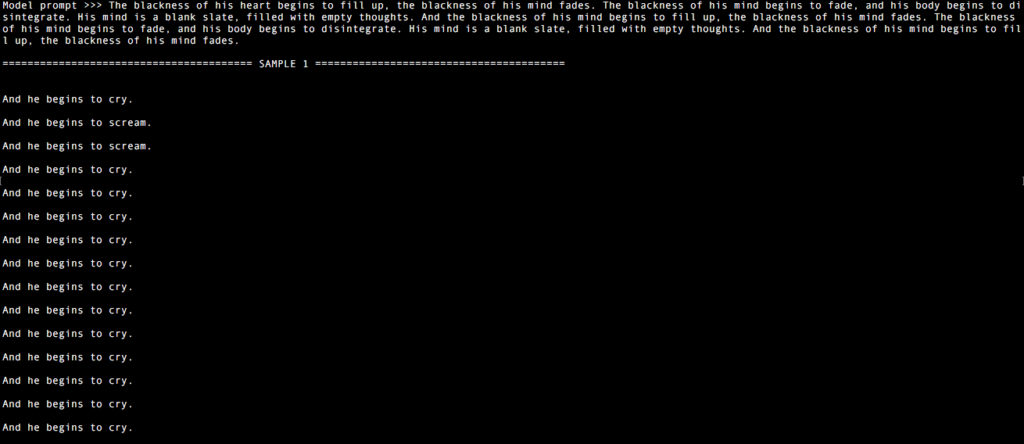

So I’ve been experimenting with some machine learning lately, and also learning about how NLP works. I’m not that far in, but I’ve been working on a new project just dubbed Fixations. When I was away, I started reading about Belief-Desire-Intention systems, and while that approach has been somewhat replaced by Machine Learning and Deep Learning, I really liked the thought of a bot getting “stuck” in a repetitive loop as it tries to constantly re-evaluate what it’s doing. This led me to start thinking about text loops, and GPT-2 had just recently been thrown into the spotlight, so I decided to experiment with it.

I was mostly looking for ways to back it into a corner, or just play with the available variable adjustments, rather than trying to train it on something. And lo, I found I could make it produce some interesting patterns by just methodically trying different things.

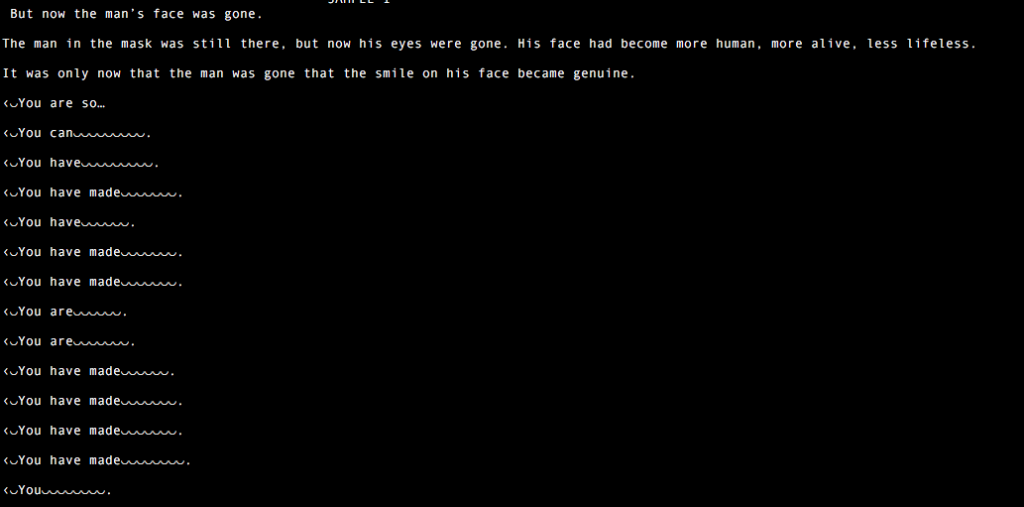

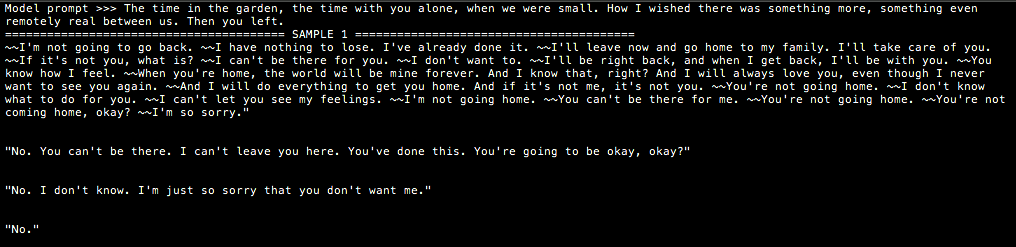

My source material was mostly bits of fan-fic [no I’m not telling you which ones ;)], and the returns were really interesting. Sometimes I would feed its already-generated blocks back into it, and it’s been neat to see what it latches onto, and what it repeats. I really like how deterministic it gets in its predictions.
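For anyone curious what that feedback idea looks like in code, here is a minimal sketch. It does not use GPT-2: `make_greedy_bigram_generator` is a hypothetical toy stand-in that always picks the most frequent next word, and `feedback_loop` is an illustrative name for the "feed the output back in" step. Both names and the tiny corpus are assumptions made for illustration, not the setup used for the images here.

```python
from collections import Counter, defaultdict
from typing import Callable, List


def make_greedy_bigram_generator(corpus: str, length: int = 8) -> Callable[[str], str]:
    """Toy stand-in for a language model (an assumption, not GPT-2).

    It counts which word follows which in the corpus, then always
    continues with the most frequent follower of the last word.
    """
    words = corpus.split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1

    def generate(prompt: str) -> str:
        tokens = prompt.split()
        if not tokens:
            return ""
        current = tokens[-1]
        out = []
        for _ in range(length):
            if current not in following:
                break  # no known continuation for this word
            counts = following[current]
            # Greedy pick; ties go to the follower seen first in the corpus.
            current = max(counts, key=counts.get)
            out.append(current)
        return " ".join(out)

    return generate


def feedback_loop(generate: Callable[[str], str], seed: str, rounds: int = 4) -> List[str]:
    """Feed each generated block back in as the next prompt."""
    blocks = []
    prompt = seed
    for _ in range(rounds):
        block = generate(prompt)
        blocks.append(block)
        # Keep the previous prompt if the generator returned nothing.
        prompt = block or prompt
    return blocks


if __name__ == "__main__":
    gen = make_greedy_bigram_generator("the cat sat on the mat and the cat ran")
    for i, block in enumerate(feedback_loop(gen, "the", rounds=3), start=1):
        print(i, block)
```

With a greedy generator like this one, the loop settles into the same block every round, which is a small-scale version of the "stuck" repetition described above. Swapping `generate` for a call into a real model would keep the loop itself unchanged.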

I even started using it to generate patterns out of just ASCII / symbols. I’m starting to wonder if I can train it on only symbols just to see where it goes, or whether that even makes sense.

Anyways, this is what I’ve been up to lately. I’m looking at translating these into some form of printed matter, and continuing to learn about NLP concepts.